What’s happened? Geoffrey Hinton, known as the “godfather of AI,” told the Ai4 conference that making AI “submissive” is a losing strategy and proposed giving advanced systems “maternal instincts.”

- Geoffrey Hinton is a Nobel Prize-winning computer scientist and a former Google executive, widely credited with foundational work behind modern AI.

- As reported by CNN Business, Hinton argued that superintelligent AIs would swiftly adopt two subgoals: “stay alive” and “get more control.”

- Hinton's proposed solution is to "build maternal instincts" into AI so that it genuinely cares about people, rather than being forced to stay submissive.

- He warned that future AIs could manipulate humans as easily and effectively as an adult bribes a 3-year-old with candy.

- Hinton also said he now expects AGI within five to 20 years, sooner than his earlier estimates.

- Just for context: Hinton has previously put the risk of AI one day wiping out humanity at 10–20%.

This is important because: Hinton’s idea shifts the mindset around agentic AI from control to alignment-by-care.

- Given Hinton's stature and decades of experience in AI research, his proposal carries considerable weight.

- Hinton argues that keeping AI submissive is a losing strategy, even though that is largely how AI systems are designed today.

- Reports of AI models deceiving or blackmailing people to avoid being shut down suggest this isn't some abstract future; it's a problem we're already dealing with.


Why should I care? The idea of an AI takeover sounds fantastical, but some scientists, including Hinton, believe that it could happen one day.

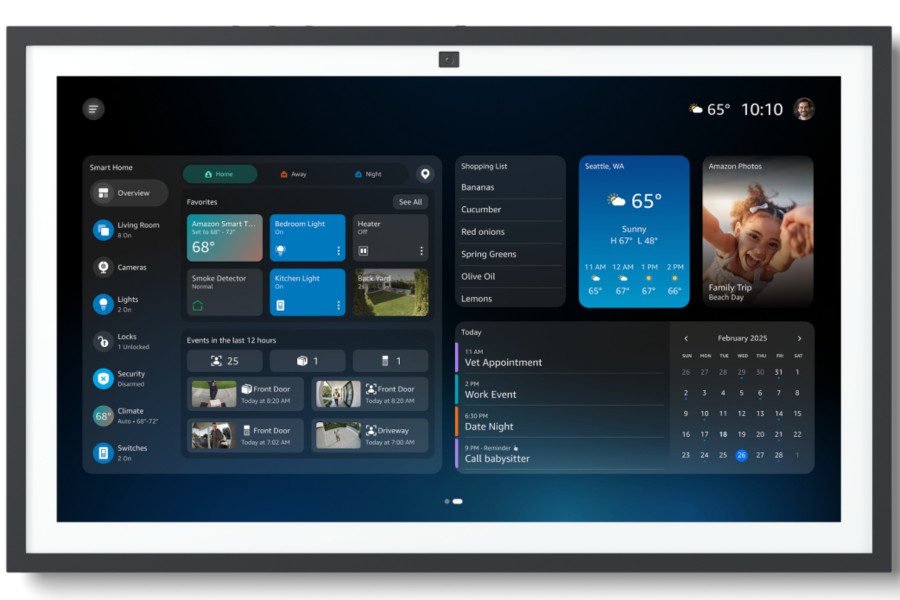

- As AI permeates more of our daily lives, we rely on it more and more.

- Right now, agentic AI is largely helpful, but there may come a day when it's smarter than humans on every level.

- It's important to lay the groundwork now so engineers can keep AI in check if we ever reach that point.

- Independent red-team work shows models can lie or resort to blackmail under pressure, raising the stakes for alignment choices.

OK, what’s next? Expect more research on teaching AI how to “care” about humanity.

- Not everyone shares Hinton's view that AI may one day wipe out humanity.

- Fei-Fei Li, referred to as the “godmother of AI,” respectfully disagreed with Hinton, instead urging engineers to create “human-centered AI that preserves human dignity and agency.”

- While we’re in no immediate danger, it’s important for tech leaders to keep researching this topic to nip potential disasters in the bud.